Assemble the Corpus

Building an LLM begins with assembling the corpus, the dataset on which the model is trained. Most of this data comes from web crawling, a process that systematically scans and collects content from the public internet. The corpus serves as the model's foundational knowledge base. But how exactly does this assembly process work?

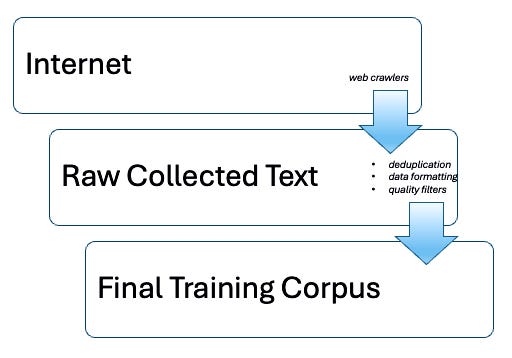

Assembling the corpus involves several key steps (Figure 1). First, web crawlers collect text from millions of websites, creating a vast repository of written material. Because much of this content is repetitive, with the same pages, articles, and boilerplate appearing across many sites, a deduplication step removes redundant text. The remaining data is then converted into a consistent format, for example by stripping HTML markup and normalizing the text encoding, so that it can be processed efficiently. The final dataset often contains hundreds of billions or even trillions of words.
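To make the deduplication step concrete, here is a minimal sketch that removes exact duplicates after light normalization (lowercasing and collapsing whitespace). The function names `normalize` and `deduplicate` are hypothetical. Real pipelines typically also remove near-duplicates with techniques such as MinHash, which this sketch does not attempt.

```python
import hashlib
import re


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivially different copies match."""
    return re.sub(r"\s+", " ", text.strip().lower())


def deduplicate(documents: list[str]) -> list[str]:
    """Keep the first occurrence of each document, comparing hashes of normalized text."""
    seen: set[str] = set()
    unique: list[str] = []
    for doc in documents:
        digest = hashlib.sha256(normalize(doc).encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(doc)
    return unique


pages = [
    "Cats are mammals.",
    "cats are   MAMMALS.",  # same content, different case and spacing
    "Dogs bark.",
]
print(deduplicate(pages))  # ['Cats are mammals.', 'Dogs bark.']
```

Hashing each document rather than comparing documents pairwise keeps the check to a single set lookup per page, which matters when the input runs to billions of pages.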

The web-crawled data used to build large language models frequently comes from sources like Common Crawl, a non-profit project that regularly crawls and archives vast portions of the internet. Another widely used dataset is Google's C4 (Colossal Clean Crawled Corpus), which is derived from Common Crawl and applies filtering heuristics to remove low-quality text such as boilerplate, very short pages, and offensive content. These large-scale collections provide a diverse range of text from books, articles, forums, and other online sources.
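The following sketch illustrates the kind of line-level cleaning a dataset like C4 performs. It is loosely modeled on heuristics reported for C4 (keeping lines that end in terminal punctuation and contain at least five words, dropping "enable JavaScript" notices, discarding pages with too little prose), but it is not C4's actual code. The thresholds and the `clean_page` function are illustrative assumptions, and counting kept lines is only a rough stand-in for counting sentences.

```python
from typing import Optional

TERMINAL_PUNCTUATION = (".", "!", "?", '"')  # a kept line must end with one of these
MIN_WORDS_PER_LINE = 5  # illustrative threshold (assumption)
MIN_LINES_PER_PAGE = 3  # illustrative threshold; kept lines stand in for sentences


def clean_page(page: str) -> Optional[str]:
    """Keep only sentence-like lines; return None if too little usable text remains."""
    kept = []
    for raw_line in page.splitlines():
        line = raw_line.strip()
        if not line.endswith(TERMINAL_PUNCTUATION):
            continue  # drops menus, headings, and other fragments
        if len(line.split()) < MIN_WORDS_PER_LINE:
            continue  # drops very short lines
        if "javascript" in line.lower():
            continue  # drops "please enable JavaScript" style notices
        kept.append(line)
    if len(kept) < MIN_LINES_PER_PAGE:
        return None  # discard pages with too little prose
    return "\n".join(kept)


page = """Home | About | Contact
The river flooded the valley after three days of rain.
Residents were moved to higher ground by the evening.
Please enable JavaScript to view the comments below.
Officials expect the water to recede by Friday.
Click here
"""
print(clean_page(page))  # prints only the three sentences about the flood
```

Rules like these are cheap to apply at web scale, but they are blunt: they also discard legitimate text such as code snippets or bulleted lists, which is one reason different datasets make different filtering trade-offs.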

Most large language models are trained on multilingual data, and many recent models are also multi-modal: they can process and generate not only text but also other forms of data such as images, audio, or video. While this book will not cover multi-modal architectures, the core principles of how language models are trained and how they work, which we explore here, carry over to these more complex systems. For simplicity, we will assume that the final dataset for our discussion is primarily in English. The end product can be thought of as a single massive text file containing an extensive collection of written material, which forms the basis for everything that follows in building the model.
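The "single massive text file" picture can be sketched as follows: documents are concatenated into one UTF-8 file with a boundary marker between them, and the file is then read back line by line to count words without loading it all into memory. The `<|endoftext|>` marker mirrors the end-of-text token used by GPT-2's tokenizer, but its use here, along with the helper names `write_corpus` and `count_words`, is an assumption for illustration.

```python
import tempfile
from pathlib import Path

SEPARATOR = "<|endoftext|>"  # assumed document boundary marker


def write_corpus(documents: list[str], path: Path) -> None:
    """Concatenate documents into one UTF-8 text file, each followed by a boundary marker."""
    with path.open("w", encoding="utf-8") as f:
        for doc in documents:
            f.write(doc.strip())
            f.write("\n" + SEPARATOR + "\n")


def count_words(path: Path) -> int:
    """Stream the file line by line so even a very large corpus fits in memory."""
    total = 0
    with path.open(encoding="utf-8") as f:
        for line in f:
            if line.strip() == SEPARATOR:
                continue  # boundary markers are not words
            total += len(line.split())
    return total


with tempfile.TemporaryDirectory() as tmp:
    corpus_path = Path(tmp) / "corpus.txt"
    write_corpus(["The cat sat.", "Dogs bark loudly."], corpus_path)
    n_words = count_words(corpus_path)
print(n_words)  # 6
```

Keeping an explicit boundary between documents lets later stages know where one source ends and the next begins, even though the corpus is stored as one continuous stream of text.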

It's important to recognize that a significant portion of publicly available data is problematic, containing misinformation, propaganda, bias, and harmful content such as misogyny and hate speech. Depending on the model and its developer, varying efforts are made to filter out low-quality or inappropriate material before training. For proprietary models, however, this filtering process is typically opaque, making it difficult to assess what content has been excluded and what biases may remain in the final dataset. This highlights a fundamental limitation: an LLM on its own has no intrinsic ability to distinguish truth from falsehood, or high-quality data from low-quality data. The model learns patterns from whatever data it receives, which makes the quality and curation of the corpus critical to the system's reliability and trustworthiness.
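One simple form of content filtering is a blocklist: any document containing a listed term is dropped. The sketch below shows the idea with hypothetical placeholder terms (`badword1`, `badword2`); real pipelines use much larger curated lists, often alongside trained classifiers, and the helper name `is_acceptable` is an assumption.

```python
import re

# Hypothetical placeholder terms; a real list would be large and carefully curated.
BLOCKLIST: frozenset[str] = frozenset({"badword1", "badword2"})


def is_acceptable(document: str, blocklist: frozenset[str] = BLOCKLIST) -> bool:
    """Reject a document if any whole word matches the blocklist (case-insensitive)."""
    words = set(re.findall(r"[a-z0-9']+", document.lower()))
    return words.isdisjoint(blocklist)


docs = ["A recipe for bread.", "This page contains badword1 twice: BADWORD1."]
filtered = [d for d in docs if is_acceptable(d)]
print(filtered)  # ['A recipe for bread.']
```

Note what such a filter cannot do: a false claim written in polite language passes straight through, and a harmless page that happens to mention a listed word is thrown away. Keyword matching removes some harmful content, but it does not give the model any ability to tell truth from falsehood.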